Introduction

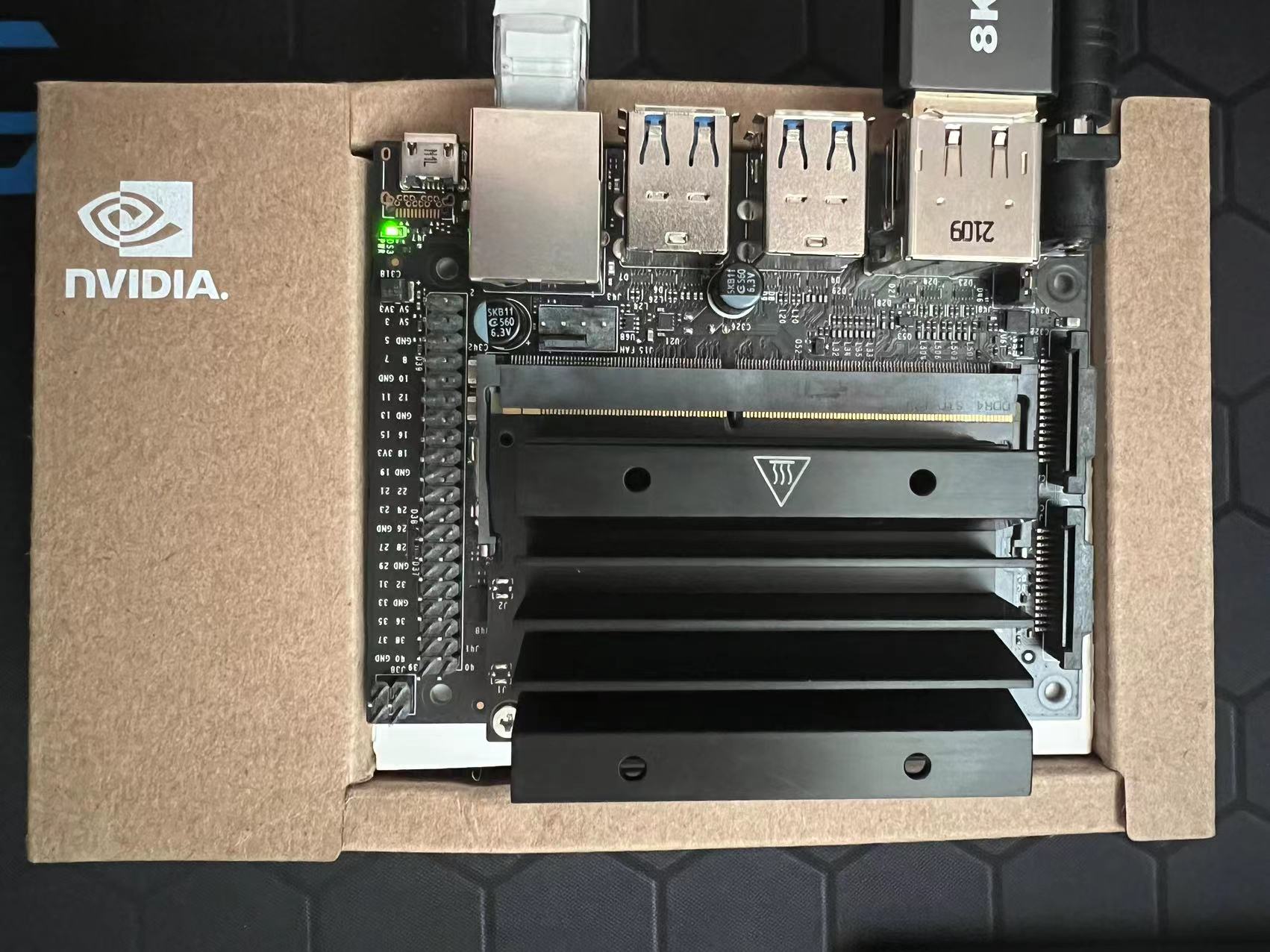

This article primarily documents the process of setting up PaddleOCR from scratch on the NVIDIA Jetson Nano. It includes steps for OS image preparation, VNC configuration, installation of paddlepaddle-gpu, and the performance of PaddleOCR using CUDA and TensorRT. The main purpose is to record the configuration process for easy reference in the future. The Jetson Nano developer kit used in this project is the P3450 model with 4GB RAM.

Power Supply

Although the Jetson Nano supports a 5V/2A Micro USB power supply, some power adapters’ actual output voltage or voltage drop across the cable can lead to insufficient power, causing the Jetson Nano to fail to start properly or encounter unknown errors. Therefore, it is recommended to use a 5V/4A DC Power input, as this not only ensures stable operation but also allows the Jetson Nano to perform at its full capacity. DC-jack power supply can be selected by shorting the DC-jack select jumper.

OS Image Preparation

Here, the image I used is the official Jetson Nano Developer Kit SD Card Image jp-4.6.1 (2022/02/23), which is based on Ubuntu 18.04 and includes CUDA 10.2, cuDNN 8.2, and TensorRT 8.2. You can also browse and download other official images and files from the Jetson Download Center.

I used a 64GB microSD card to install the image. Although the official recommendation is to use balenaEtcher for burning the image to the SD card, I encountered several unknown issues during the process which led to initial boot failures (many similar cases can be found online). Therefore, I switched to Win32DiskImager for burning the image (you need to extract the .img file from the downloaded zip file), and it started up normally.

After burning the image onto the SD card, insert the card into the Jetson Nano's card slot and connect the power to boot. On first boot, you'll need to complete some basic configuration. Once that is done, remember to run sudo apt update and sudo apt upgrade.

VNC Configuration

The Jetson Nano can be connected to an external monitor, but in most cases, this isn’t necessary, as its primary use is as an edge computing device. We typically access it remotely via SSH or remote desktop.

I tried various VNC servers and XRDP, but ultimately chose Vino-Server due to its simple setup and stable performance. I encountered various issues while configuring XRDP, and its compatibility with Ubuntu 18.04 seems problematic, leading me to abandon it in the end.

Note: The following method applies only to the Ubuntu 18.04 system on the Jetson Nano. Other OS versions may need slightly different steps.

Let’s start installing and configuring Vino-Server:

$ sudo apt update

$ sudo apt install vino

# set vino login options

$ gsettings set org.gnome.Vino prompt-enabled false

$ gsettings set org.gnome.Vino require-encryption false

Add the network card to the Vino service:

$ nmcli connection show

# this lists the UUID of every network connection

# copy the UUID of the connection you are currently using and put it in place of 'your UUID' in the following command

$ dconf write /org/gnome/settings-daemon/plugins/sharing/vino-server/enabled-connections "['your UUID']"
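The UUID lookup above can also be scripted. The following is a minimal sketch, assuming nmcli's terse mode (`-t`) with an explicit field list (`-f UUID,DEVICE,NAME`); the helper names and the sample UUID are made up for illustration and are not part of Vino or NetworkManager.

```python
import subprocess

VINO_KEY = "/org/gnome/settings-daemon/plugins/sharing/vino-server/enabled-connections"


def parse_active_connections(terse_output):
    """Parse the output of `nmcli -t -f UUID,DEVICE,NAME connection show --active`."""
    connections = []
    for line in terse_output.splitlines():
        if not line.strip():
            continue
        # UUID and DEVICE contain no ':', so split twice and keep the rest as NAME.
        uuid, device, name = line.split(":", 2)
        connections.append({"uuid": uuid, "device": device, "name": name})
    return connections


def vino_dconf_command(uuid):
    """Build the dconf command from the article for one connection UUID."""
    return ["dconf", "write", VINO_KEY, "['%s']" % uuid]


def list_active_connections():
    """Query NetworkManager on the device itself (needs nmcli installed)."""
    result = subprocess.run(
        ["nmcli", "-t", "-f", "UUID,DEVICE,NAME", "connection", "show", "--active"],
        check=True, stdout=subprocess.PIPE, universal_newlines=True,
    )
    return parse_active_connections(result.stdout)


# Demo with made-up sample output (not taken from a real device).
sample = "11111111-2222-3333-4444-555555555555:eth0:Wired connection 1\n"
for conn in parse_active_connections(sample):
    print(conn["device"], "->", " ".join(vino_dconf_command(conn["uuid"])))
```

On the Jetson Nano, `list_active_connections()` would replace the sample string. The printed command is the same dconf call shown above with the UUID filled in.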

Start the Vino server:

$ export DISPLAY=:0

$ nohup /usr/lib/vino/vino-server &

Now, the Vino-Server is running. You can use a VNC viewer to connect to the Jetson Nano.
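Before opening a VNC viewer, it can help to confirm from another machine that the server is reachable. This is a small sketch, assuming Vino listens on the standard VNC port 5900; the function name and the IP address in the usage note are placeholders.

```python
import socket


def vnc_port_open(host, port=5900, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `vnc_port_open("192.168.1.50")` (a hypothetical address) should return True once vino-server is running on the Jetson Nano and the network connection has been added to the sharing settings.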

PaddlePaddle-gpu Installation

Environment Preparation

Before installation, we need to check and confirm the versions of CUDA, cuDNN, and TensorRT. The current JetPack version is 4.6.1, corresponding to CUDA version 10.2, cuDNN version 8.2.1, and TensorRT version 8.2.1.

First, we need to set the CUDA environment variables:

$ sudo apt install nano

# edit your own .bashrc (sudo is not needed here)

$ nano ~/.bashrc

# add the following lines to the end of .bashrc

export CUDA_HOME=/usr/local/cuda-10.2

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

export PATH=/usr/local/cuda/bin:$PATH

Reload the .bashrc file so the changes take effect in the current shell:

$ source ~/.bashrc
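To double-check that the three variables took effect, a short script can inspect the environment. This is only a sketch: the expected values are copied from the lines added to .bashrc above, and the function name is made up.

```python
import os

# Expected entries, taken from the .bashrc lines in this article.
EXPECTED = {
    "CUDA_HOME": "/usr/local/cuda-10.2",
    "LD_LIBRARY_PATH": "/usr/local/cuda/lib64",
    "PATH": "/usr/local/cuda/bin",
}


def missing_cuda_settings(env):
    """Return the names of variables that lack the expected entry."""
    missing = []
    for name, expected in EXPECTED.items():
        # Linux search-path variables are colon-separated lists.
        entries = env.get(name, "").split(":")
        if expected not in entries:
            missing.append(name)
    return missing


print("Missing or incomplete:", missing_cuda_settings(os.environ) or "none")
```

Run it in a new terminal after sourcing .bashrc. Any name it lists has not been picked up by that shell.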

Now you can check the CUDA version:

$ nvcc -V

Check the cuDNN version:

$ cat /usr/include/cudnn_version.h | grep CUDNN_MAJOR -A 2

Check the TensorRT version:

$ dpkg -l | grep TensorRT
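The grep on cudnn_version.h prints three separate #define lines. As an illustration, they can be combined into a single version string. The sketch below assumes the header uses the CUDNN_MAJOR, CUDNN_MINOR, and CUDNN_PATCHLEVEL macros; the sample text is written by hand to mirror the version stated above, not copied from a device.

```python
import re


def parse_cudnn_version(header_text):
    """Extract 'major.minor.patch' from the text of cudnn_version.h."""
    parts = []
    for key in ("MAJOR", "MINOR", "PATCHLEVEL"):
        # Match only lines such as '#define CUDNN_MAJOR 8'.
        match = re.search(r"#define\s+CUDNN_%s\s+(\d+)" % key, header_text)
        if match is None:
            raise ValueError("CUDNN_%s not found in header" % key)
        parts.append(match.group(1))
    return ".".join(parts)


# Hand-written sample mirroring the layout of the real header.
sample = """
#define CUDNN_MAJOR 8
#define CUDNN_MINOR 2
#define CUDNN_PATCHLEVEL 1
#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)
"""
print(parse_cudnn_version(sample))  # prints 8.2.1
```

On the Jetson Nano, pass the contents of /usr/include/cudnn_version.h instead of the sample string.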

Install paddlepaddle-gpu

↓↓↓Updated on 2023/07/13↓↓↓

To use PaddleOCR, we first need to install paddlepaddle-gpu. The version installed here is 2.5.0. Because the Jetson Nano has an ARM (aarch64) CPU, we cannot install it directly with pip. There are two ways to install it: compile it yourself, or use the .whl package that the official Paddle team provides for Jetson. Here, we'll use the latter, as it is simpler and more straightforward.

Note: The official .whl package is for inference only; training is not supported.

You can browse and download the latest .whl file from the Official Release Page. For example, the version description Jetpack4.6.1:nv_Jetson-cuda10.2-trt8.2-Nano together with the package file name paddlepaddle_gpu-2.5.0-cp37-cp37m-linux_aarch64.whl means that the package targets JetPack 4.6.1 (CUDA 10.2, TensorRT 8.2), the paddlepaddle-gpu version is 2.5.0, and the Python version is 3.7. Pick the version that matches your Jetson Nano.
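The file name follows the standard wheel naming scheme (name, version, Python tag, ABI tag, platform tag), so the fields described above can be read off programmatically. The following is a minimal sketch with hypothetical helper names. It handles only simple names like the one in this article and is not a replacement for pip's own compatibility checks.

```python
import sys


def parse_wheel_filename(filename):
    """Split a wheel file name into its five naming components."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: %s" % filename)
    parts = filename[:-len(".whl")].split("-")
    if len(parts) == 6:
        # An optional build tag may sit between the version and the Python tag.
        del parts[2]
    if len(parts) != 5:
        raise ValueError("unexpected wheel name: %s" % filename)
    keys = ("name", "version", "python_tag", "abi_tag", "platform_tag")
    return dict(zip(keys, parts))


def interpreter_tag():
    """Return the CPython tag of the running interpreter, e.g. 'cp37'."""
    return "cp%d%d" % sys.version_info[:2]


info = parse_wheel_filename("paddlepaddle_gpu-2.5.0-cp37-cp37m-linux_aarch64.whl")
print(info)
print("Matches this interpreter:", info["python_tag"] == interpreter_tag())
```

Run under the default Python 3.6 on JetPack 4.6.1, the last line would report a mismatch, which is why a separate Python 3.7 is needed.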

Currently, the python version supported by paddlepaddle-gpu 2.5.x is 3.7, but the default python version on the Jetson Nano (JP-4.6.1) is 3.6. Therefore, we need to install python3.7 first:

$ sudo apt install python3.7

# necessary for paddlepaddle-gpu installation

$ sudo apt install python3.7-dev

$ sudo apt install python3-pip

$ pip3 install virtualenv

To avoid conflicts with the default python3.6, it is best to create a virtual environment for python3.7:

$ cd ~

$ virtualenv -p /usr/bin/python3.7 pdl_env

Assuming the .whl file has been downloaded to the ~ directory, we can install it using pip3:

# activate the virtual environment

$ source pdl_env/bin/activate

# install paddlepaddle-gpu inference library

$ pip3 install paddlepaddle_gpu-2.5.0-cp37-cp37m-linux_aarch64.whl

Verify the installation by importing paddle and printing the version number:

# test.py

import paddle

print(paddle.__version__)

paddle.utils.run_check()

Next, install PaddleOCR. It can be installed directly with pip3, without downloading a .whl file:

$ pip3 install paddleocr

Test PaddleOCR

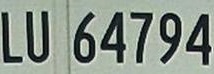

PaddleOCR mainly provides text detection and recognition functions. Here, I did a simple test on text recognition. The test text image is as follow:

The code is as follow:

import time

import paddle

from paddleocr import PaddleOCR

print(paddle.__version__)

# CPU inference, TensorRT disabled

ocr = PaddleOCR(warmup=True, use_gpu=False, use_tensorrt=False)

# warm up 50 times; these runs are not timed

for i in range(50):

    result = ocr.ocr('text_example.jpg', cls=False, det=False) # recognition only

for line in result:

    print(line)

st = time.time()

for i in range(100): # run 100 times per test

    result = ocr.ocr('text_example.jpg', cls=False, det=False) # recognition only

elapsed = time.time() - st # stop the clock before printing

for line in result:

    print(line)

print(f"Average {elapsed/100:.3f} seconds.")

The above code recognizes the text in the image using the default lightweight model provided by PaddleOCR.

ocr = PaddleOCR(warmup=True, use_gpu=False, use_tensorrt=False) means that we use the CPU to run the inference model, and the TensorRT acceleration is not used. We can turn on the GPU and TensorRT acceleration by setting use_gpu=True and use_tensorrt=True.
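When comparing several configurations, it is convenient to wrap the warm-up and timing loops in one reusable function. The helper below is a sketch, not part of PaddleOCR: `benchmark` is a made-up name, and the demo times a dummy workload so that it runs on any machine.

```python
import time


def benchmark(fn, warmup=50, runs=100):
    """Call fn() `warmup` times untimed, then return the mean seconds over `runs` calls."""
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs


# Dummy workload so the sketch runs anywhere.
avg = benchmark(lambda: sum(range(10000)), warmup=5, runs=20)
print("Average %.6f seconds." % avg)

# On the Jetson Nano, the same helper could wrap the OCR call from this article:
#   ocr = PaddleOCR(warmup=True, use_gpu=True, use_tensorrt=True)
#   benchmark(lambda: ocr.ocr('text_example.jpg', cls=False, det=False))
```

Using `time.perf_counter()` rather than `time.time()` is a design choice for this sketch: it is a monotonic, high-resolution clock intended for measuring short intervals.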

Here is the speed comparison of the different configurations. Each configuration was tested multiple times and the average time was taken:

| Configuration | Average time per run |
|---|---|
| CPU | ~0.5 s |
| GPU | ~0.65 s |
| GPU + TensorRT | ~0.03 s |

Note: For GPU + TensorRT configuration, the model loading and first run will take a long time, but the subsequent runs will be very fast. TensorRT will optimize the model at the first run.

I also ran the same test on my laptop with an AMD R9-7940HS CPU (no NVIDIA GPU). The average time was ~0.1s, which is slower than the Jetson Nano with the GPU + TensorRT configuration.

As you can see, TensorRT acceleration makes a large difference: inference takes ~0.03s, far faster than the ~0.5s of the CPU configuration.
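To put the measurements in proportion, the ratios can be worked out from the approximate timings reported in this article. The snippet below is only arithmetic on those rounded figures, so the results are rough (about 17x for CPU, 22x for GPU-only, and 3x for the laptop, each relative to GPU + TensorRT).

```python
def speedup(slow_seconds, fast_seconds):
    """Return how many times faster the fast configuration is."""
    return slow_seconds / fast_seconds


# Approximate average times in seconds, as reported in this article.
timings = {
    "CPU": 0.5,
    "GPU": 0.65,
    "Laptop CPU (R9-7940HS)": 0.1,
}
tensorrt = 0.03  # GPU + TensorRT

for name, seconds in timings.items():
    print("%s: %.1fx slower than GPU + TensorRT" % (name, speedup(seconds, tensorrt)))
print("GPU + TensorRT throughput: about %.0f images per second" % (1 / tensorrt))
```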

However, the GPU-only configuration is slower than the CPU configuration. I can only guess at the reasons: the lightweight PaddleOCR model may be better optimized for CPU, and for small models or small inputs the data transfer overhead may outweigh the gain from GPU computation. Larger models or larger inputs should benefit more from GPU acceleration. With TensorRT, though, the GPU speedup is substantial and more than enough for edge computing.

If Jetson Nano is used for edge computing, TensorRT acceleration is highly recommended.

Hint: If you want to run larger models or data on the Jetson Nano, you may need to increase the swap memory space. It’s recommended to increase the swap memory space to 8GB. You can refer to the following steps to increase the swap memory space:

$ sudo fallocate -l 8G /var/swapfile8G

$ sudo chmod 600 /var/swapfile8G

$ sudo mkswap /var/swapfile8G

$ sudo swapon /var/swapfile8G

$ sudo bash -c 'echo "/var/swapfile8G swap swap defaults 0 0" >> /etc/fstab'
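After enabling the swap file, the total swap size can be confirmed from /proc/meminfo. The following sketch parses the SwapTotal line. The function name is made up, and the sample text is hand-written to show the layout rather than copied from a device.

```python
def swap_total_gib(meminfo_text):
    """Return the SwapTotal value from /proc/meminfo text, in GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("SwapTotal:"):
            kib = int(line.split()[1])  # the value is reported in kB (KiB)
            return kib / (1024 ** 2)
    raise ValueError("SwapTotal not found")


# Hand-written sample in the /proc/meminfo layout.
sample = "MemTotal:        4059000 kB\nSwapTotal:       8388608 kB\nSwapFree:        8388608 kB\n"
print("Swap: %.1f GiB" % swap_total_gib(sample))
```

On the Jetson Nano, read the real file with `open("/proc/meminfo").read()` and pass its contents to the function. Note that the default image may already have some zram swap enabled, so the total can exceed the size of the new swap file.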