Introduction

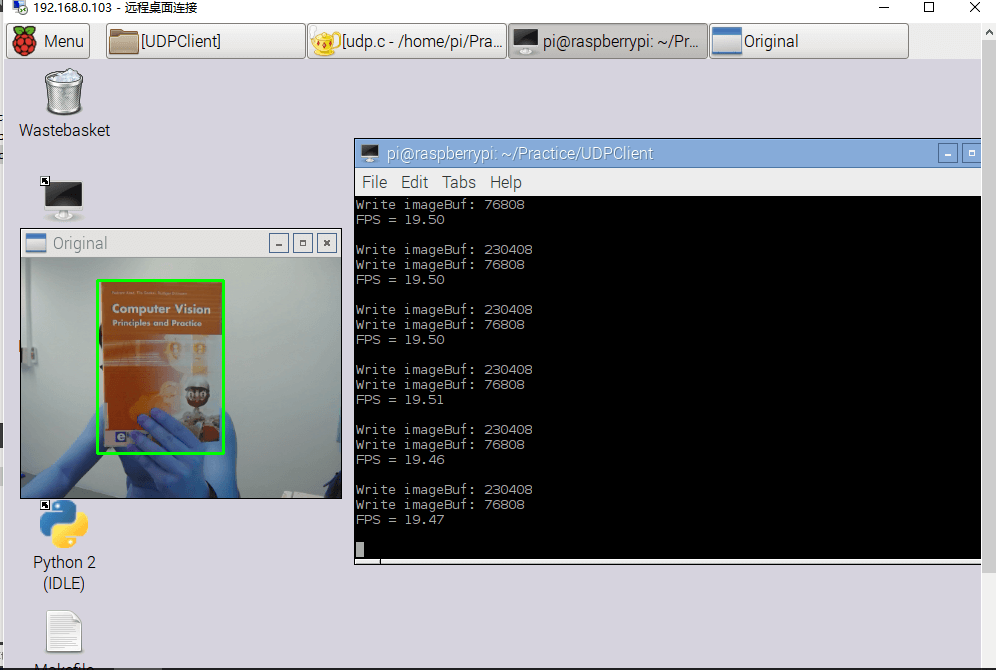

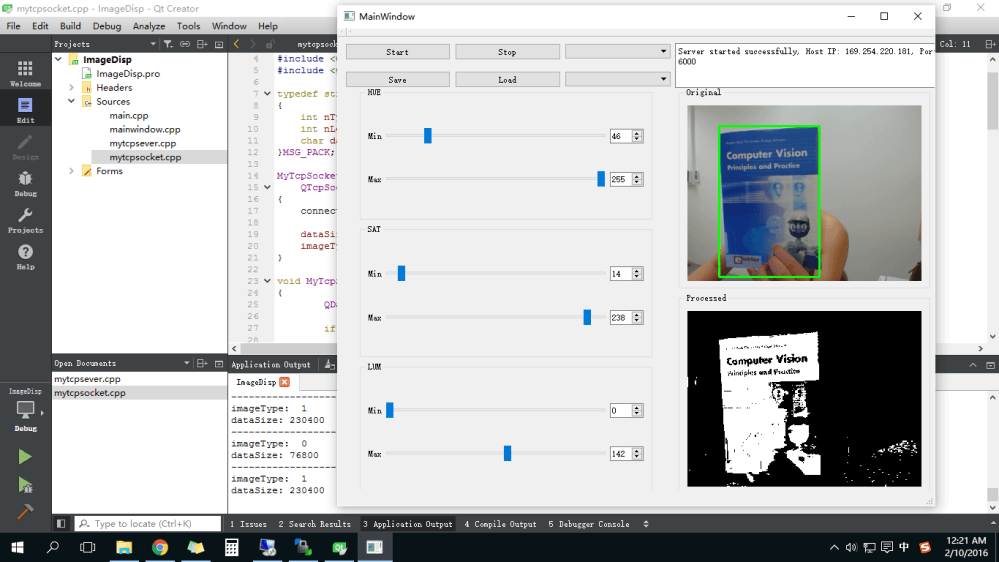

This is a basic vision tuning application. The client device (with a camera) captures live video frames and transmits them to the server. The client also applies simple color thresholding to each frame (based on the HSV color range received from the server) and sends the thresholded image to the server. The server receives the live frames and thresholded images from the client and shows them on screen. Tuning sliders let us alter the HSV color range parameters, which are sent to the client immediately for thresholding.
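The thresholding step described above can be sketched in plain C. This is only an illustration, not the original client code (which relies on OpenCV for image handling): the function name `threshold_hsv`, the interleaved 3-bytes-per-pixel layout, and the `{h_min, h_max, s_min, s_max, v_min, v_max}` parameter order are all assumptions made for this example.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: writes 255 to mask[i] when pixel i lies inside the
 * inclusive HSV range, and 0 otherwise. `hsv` holds interleaved H,S,V bytes.
 * `range` is assumed to be {h_min, h_max, s_min, s_max, v_min, v_max}. */
void threshold_hsv(const uint8_t *hsv, size_t pixel_count,
                   const uint8_t range[6], uint8_t *mask)
{
    for (size_t i = 0; i < pixel_count; i++) {
        const uint8_t *p = hsv + 3 * i;
        int inside = p[0] >= range[0] && p[0] <= range[1] &&
                     p[1] >= range[2] && p[1] <= range[3] &&
                     p[2] >= range[4] && p[2] <= range[5];
        mask[i] = inside ? 255 : 0;
    }
}
```

The resulting `mask` is a single-channel image, which is why the thresholded frame can be sent to the server as a grayscale image.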

TCP Client

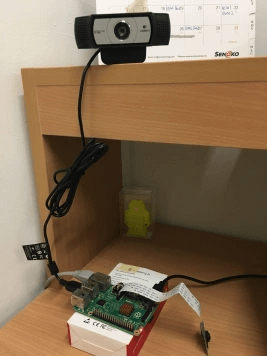

In my case, I use a Raspberry Pi 2 with a Logitech USB camera and OpenCV 3.1.0 (OpenCV 2 also works). The code is written in C, and you can easily adapt it to any Linux machine (Windows needs some minor changes, but the logic is the same). The client sends live video (images) to the server and receives tuning parameters (text) from the server.

As the picture above shows, the program on the Raspberry Pi 2 reaches 19.5 FPS while transmitting video, which is quite smooth. It should be faster still with the Pi camera.

TCP Server

The server program is written in C++ with Qt 5. Because Qt is a cross-platform framework, the server code can be built and run on both Windows and Linux computers.

Key Points

You can get the full code from my GitHub; it should be clear.

- In the client program, to decrease the latency of receiving parameters, a separate thread receives the text parameters (not images) from the server, in parallel with the image transmission on the main thread.

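    // Needs <stdio.h>, <string.h>, <pthread.h>, <sys/socket.h>, <netinet/in.h>, <arpa/inet.h>.
    // socket_fd, SERVER_IP, SERVER_PORT and RcvData() are defined elsewhere in the full source.
    // Returns 0 on success, -1 on failure.
    int InitSocket() {
        // Create socket
        socket_fd = socket(AF_INET, SOCK_STREAM, 0);
        if (socket_fd < 0) {
            printf("Socket create error \r\n");
            return -1;
        }

        // Server information (memset also clears sin_zero)
        struct sockaddr_in server_addr;
        memset(&server_addr, 0, sizeof(server_addr));
        server_addr.sin_family = AF_INET;
        server_addr.sin_port = htons(SERVER_PORT);
        server_addr.sin_addr.s_addr = inet_addr(SERVER_IP);

        // Connect to server
        int res = connect(socket_fd, (struct sockaddr *)&server_addr, sizeof(server_addr));
        printf("connect res is %d\r\n", res);
        if (res < 0) {
            return -1;
        }

        // Create a thread to receive parameters from the server;
        // the main thread is used for sending images
        pthread_t thread;
        if (pthread_create(&thread, NULL, RcvData, &socket_fd) != 0) {
            printf("Thread create error \r\n");
            return -1;
        }
        return 0;
    }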

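    // Sends one packet: MSG_PACK header followed by nLen bytes from buf.
    // Returns the total number of bytes sent, or 0 on failure.
    int SendImage(int sock, char *buf, int nLen, int imgeType) {
        size_t total = sizeof(MSG_PACK) + (size_t)nLen;
        char *pSZMsgPack = (char *)malloc(total);
        if (pSZMsgPack == NULL) {
            return 0;
        }

        // Header fields go out in network byte order, followed by the raw data
        MSG_PACK *msgPack = (MSG_PACK *)pSZMsgPack;
        msgPack->nImageType = htonl(imgeType);
        msgPack->nLen = htonl(nLen);
        memcpy(pSZMsgPack + sizeof(MSG_PACK), buf, nLen);

        // write() may send fewer bytes than requested, so loop until done
        size_t sent = 0;
        while (sent < total) {
            ssize_t n = write(sock, pSZMsgPack + sent, total - sent);
            if (n <= 0) {
                break;
            }
            sent += (size_t)n;
        }
        printf("Write imageBuf: %zu\n", sent);
        free(pSZMsgPack);

        return (sent == total) ? (int)total : 0;
    }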

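    // Thread function: receives parameters and commands from the server.
    void *RcvData(void *fd) {
        int byte, nType, i;
        unsigned char char_recv[100];
        int sock = *((int *)fd);

        while (1) {
            // First int: message type (only its first byte is used)
            if (recv(sock, char_recv, sizeof(int), 0) <= 0) {
                printf("recv error or connection closed \r\n");
                break;
            }
            nType = (int)char_recv[0];
            printf("Recv data 1 : %d \n", nType);

            // Second int: read and logged, otherwise unused
            if (recv(sock, char_recv, sizeof(int), 0) <= 0) {
                printf("recv error or connection closed \r\n");
                break;
            }
            printf("Recv data 2 : %d \n", char_recv[0]);

            if (nType == 0) {
                // Type 0: six parameter bytes (the HSV color range)
                byte = recv(sock, char_recv, sizeof(char_recv), 0);
                if (byte <= 0) {
                    printf("recv error \r\n");
                    break;
                }
                if (byte < 6) {
                    continue;  // incomplete parameter set, keep the old values
                }
                for (i = 0; i < 6; i++) {
                    RecvedData[i] = char_recv[i];
                }
                printf("recv data: %d %d %d %d %d %d\r\n", char_recv[0], char_recv[1], char_recv[2], char_recv[3], char_recv[4], char_recv[5]);
            } else if (nType == 1) {
                printf("Recv Save Command\n");
            } else if (nType == 2) {
                printf("Recv Load Command\n");
                // Reply with six fixed parameter values as a type-3 packet
                char buf[6] = {10, 80, 50, 90, 40, 90};
                int nRes = SendImage(sock, buf, 6, 3);
                printf("Send Data : %d\n", nRes);
            }
        }
        return NULL;
    }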
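Because TCP is a byte stream, a single `recv()` call may return fewer bytes than requested. A small helper that loops until a fixed-size field has fully arrived can make the receiving thread more robust. The sketch below is an illustration only; the name `recv_all` is hypothetical and not part of the original code.

```c
#include <stddef.h>
#include <sys/types.h>
#include <sys/socket.h>

/* Hypothetical helper: reads exactly `len` bytes from `sock` into `buf`.
 * Returns 0 on success, -1 on error or if the peer closes the connection
 * before all bytes arrive. */
int recv_all(int sock, void *buf, size_t len)
{
    unsigned char *p = (unsigned char *)buf;
    size_t got = 0;
    while (got < len) {
        ssize_t n = recv(sock, p + got, len - got, 0);
        if (n <= 0) {
            return -1;
        }
        got += (size_t)n;
    }
    return 0;
}
```

With such a helper, the six parameter bytes could be read with `recv_all(sock, char_recv, 6)`, so that a short read never leaves the parameter array half updated.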

- In the client program, the image format is OpenCV's `IplImage`; on the server (Qt) side, the image format is `QImage`. Note that the channel order of `IplImage` is BGR, while `QImage` uses RGB, so we need to swap the R and B channels before sending the image. In OpenCV, `cvConvertImage()` can do this with the `CV_CVTIMG_SWAP_RB` flag. For a grayscale (thresholded) image, there is no need to swap anything.
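To make the channel swap concrete, here is a minimal sketch of what swapping R and B amounts to on a raw pixel buffer. It is not the OpenCV implementation: the helper name `swap_red_blue` is hypothetical, and the buffer is assumed to be tightly packed with 3 bytes per pixel and no row padding.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: swaps the first and third byte of every 3-byte pixel
 * in place, turning BGR into RGB (or RGB into BGR). Assumes tightly packed
 * pixels with no padding between rows. */
void swap_red_blue(uint8_t *pixels, size_t pixel_count)
{
    for (size_t i = 0; i < pixel_count; i++) {
        uint8_t *p = pixels + 3 * i;
        uint8_t tmp = p[0];
        p[0] = p[2];
        p[2] = tmp;
    }
}
```

Rows of a real `IplImage` can be padded to `widthStep` bytes, so a version working directly on `imageData` would need to walk the image row by row.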

- I define a data structure for the image data packet, containing the image type (because both the original image and the gray image are transmitted), the length of the image, and the raw data, as shown in the following code.

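    typedef struct TAG_MSG_PACK {
        int nImageType;  // image type, e.g. original or thresholded frame
        int nLen;        // length of the data that follows, in bytes
        char data[];     // raw data (flexible array member)
    } MSG_PACK;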
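Since the client converts both header fields with `htonl()` before sending, the receiver has to convert them back with `ntohl()` before using them. The following sketch shows the round trip on a raw 8-byte header. The names `pack_header`, `unpack_header`, and `HEADER_SIZE` are hypothetical, and the example assumes 4-byte `int` fields, as on the Raspberry Pi.

```c
#include <arpa/inet.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical fixed-size view of the packet header: two 32-bit fields,
 * both in network byte order on the wire. */
#define HEADER_SIZE 8

/* Writes the header for a packet of `type` carrying `len` payload bytes. */
void pack_header(unsigned char out[HEADER_SIZE], uint32_t type, uint32_t len)
{
    uint32_t net_type = htonl(type);
    uint32_t net_len = htonl(len);
    memcpy(out, &net_type, 4);
    memcpy(out + 4, &net_len, 4);
}

/* Reads the header back into host byte order. */
void unpack_header(const unsigned char in[HEADER_SIZE],
                   uint32_t *type, uint32_t *len)
{
    uint32_t net_type, net_len;
    memcpy(&net_type, in, 4);
    memcpy(&net_len, in + 4, 4);
    *type = ntohl(net_type);
    *len = ntohl(net_len);
}
```

After decoding the header, the receiver knows how many payload bytes to read next and whether to treat them as a color frame or a thresholded one.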