Introduction

Recently, I’ve been working on a project that requires edge detection on various components of sneakers. The goal is to automate the measurement of each part for quality assurance (QA) and size categorization. In this post, I’ll share what I learned along the way.

Edge detection is a classic computer vision problem: identifying the boundaries of objects within an image. Traditional methods such as the Canny, Sobel, and Laplacian operators, familiar to anyone who has studied computer vision, detect edges from image gradients. They are fast, but they are sensitive to noise and can perform poorly on complex images. On a controlled factory production line, where lighting can be optimized, these traditional algorithms often work quite well, especially when the object contrasts strongly with the background.

With the rise of deep learning, learning-based edge detectors have also emerged. They are less sensitive to noise and perform better on complex images, but they are generally slower. In this project, I experimented with both traditional methods and a deep learning-based method, Holistically-Nested Edge Detection (HED). In the following sections, I’ll briefly compare them in the context of this project and discuss their respective strengths and weaknesses.
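To make "relying on image gradients" concrete, here is a minimal NumPy sketch of the Sobel operator: it estimates horizontal and vertical intensity derivatives with 3x3 kernels and thresholds their magnitude. The function names and the threshold are illustrative choices; in practice you would call OpenCV's `cv2.Sobel` or `cv2.Canny` instead.

```python
import numpy as np

# Sobel kernels: horizontal derivative (x) and its transpose for the vertical one (y).
KX = np.array([[-1, 0, 1],
               [-2, 0, 2],
               [-1, 0, 1]], dtype=float)
KY = KX.T


def sobel_magnitude(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude of a 2-D grayscale image (cross-correlation, edge-padded)."""
    g = gray.astype(float)
    h, w = g.shape
    padded = np.pad(g, 1, mode="edge")
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    # Apply the 3x3 kernels by summing weighted, shifted copies of the image.
    for i in range(3):
        for j in range(3):
            window = padded[i:i + h, j:j + w]
            gx += KX[i, j] * window
            gy += KY[i, j] * window
    return np.hypot(gx, gy)


def sobel_edges(gray: np.ndarray, threshold: float) -> np.ndarray:
    """Binary edge map: pixels whose gradient magnitude exceeds the threshold."""
    return sobel_magnitude(gray) > threshold
```

On a clean step from black to white, only the two columns straddling the step respond. Because the kernels are plain differences of neighboring pixels, random noise added to the same image produces gradient responses of its own, which is where the noise sensitivity of these operators comes from.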

Traditional Edge Detection

Easy Case

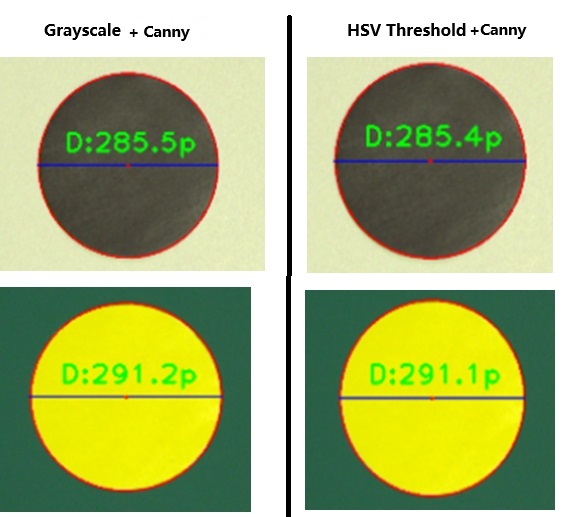

For simple images, such as the one above (used for camera calibration), where the object differs strongly in color from the background and the lighting is good, almost any algorithm detects the edges reliably (edges are marked with red lines). On the left side of the image, the RGB image is first converted to grayscale and the Canny operator is then applied. This is the simplest and most common method. On the right side, the image is first converted to the HSV color space and thresholded to obtain a binary image, and the Canny operator is then applied to that binary image. The pixel diameters measured by the two methods are almost identical. In general, the HSV approach is the better choice on a controlled production line, because HSV separates color (Hue) from brightness (Value), which makes color-based segmentation less dependent on lighting. This example, however, is too simple to show any difference.

Moderate Case

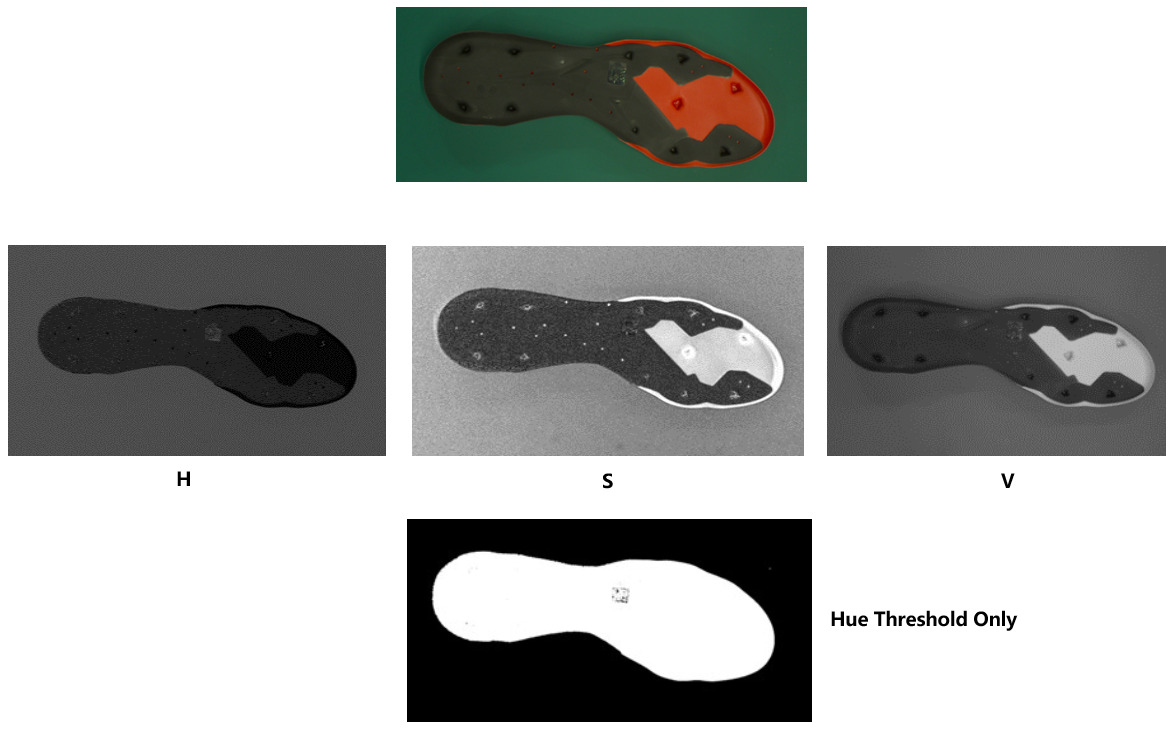

Edge detection on actual shoe components involves many more factors. The grayscale method from the previous example fails in many of these scenarios, while the HSV method handles most cases with simple color schemes, such as the shoe sole shown in the image above. We convert the RGB image to the HSV space and then threshold the Hue channel to obtain a binary image. As the image shows, the Saturation channel contains the most noise, and the Value channel (also called Brightness), which represents the intensity of the color, is heavily affected by lighting. Hue, the color component of the HSV model, is therefore the only channel we threshold on. This yields a relatively clean binary image, on which we then run the Canny operator for edge detection.

Difficult Case

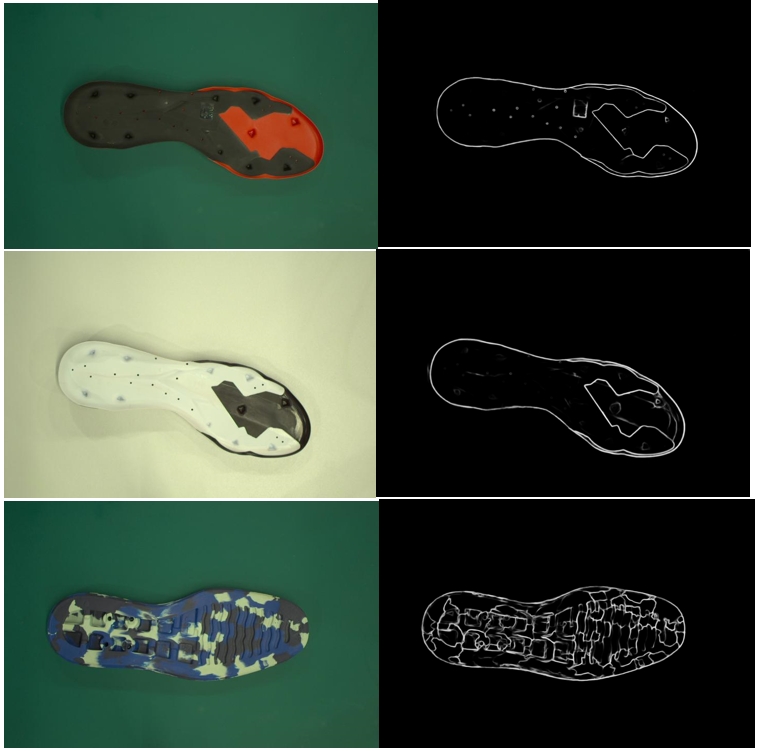

However, this HSV method is not suitable for every scenario. In the image above, for example, both the shoe sole and the background contain white. White has almost no saturation, so its hue carries no usable information, and the two regions cannot be separated by thresholding Hue. Other traditional methods struggle with such cases too, producing significant edge detection errors.
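Python's standard `colorsys` module makes the problem with white easy to see. In this small illustration (the pixel values are made up, not taken from the images), pure grays and whites have zero saturation and a hue that is simply reported as 0, while nearly white pixels with faint color casts can receive wildly different hues.

```python
import colorsys


def hsv_degrees(rgb):
    """Convert an 8-bit RGB triple to (hue in degrees, saturation, value)."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb))
    return h * 360.0, s, v


sole = hsv_degrees((245, 245, 245))          # white sole
background = hsv_degrees((230, 230, 230))    # slightly darker white background
print(sole, background)                      # same hue (0) and saturation (0); only Value differs

warm_white = hsv_degrees((250, 248, 240))    # faint yellow cast
cool_white = hsv_degrees((240, 248, 250))    # faint blue cast
print(warm_white[0], cool_white[0])          # roughly 48 vs 192 degrees, both at saturation 0.04
```

The only channel that separates the two whites is Value, which is exactly the channel most affected by lighting.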

The following image provides another example. In this case, the shoe sole itself has a complex color scheme, and the colors of the sole and background are somewhat similar. Under such circumstances, traditional methods also struggle to process the image accurately, resulting in less precise edge detection.

Deep Learning-Based Edge Detection

Recently, the application of deep learning in image processing has become increasingly widespread, and edge detection is no exception. Deep learning edge detectors such as DeepEdge, DeepContour, and Holistically-Nested Edge Detection (HED) are all based on convolutional neural networks (CNNs). The basic approach is to extract features with a pre-trained network and then detect edges with a dedicated edge detection network. These algorithms are robust to noise and handle complex images well. However, they tend to be slower and, on simple images like the examples above, do not necessarily outperform traditional methods.

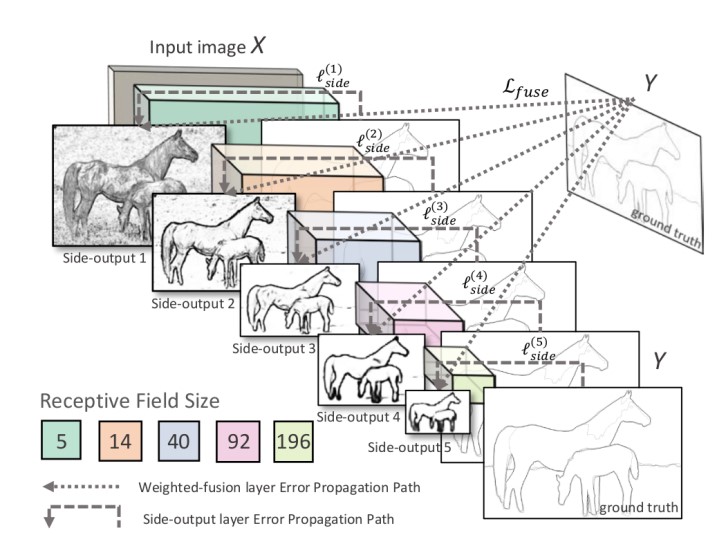

After comparing these methods, I chose HED for its superior accuracy and its relatively fast speed for a deep learning method. HED uses VGG16 for feature extraction and predicts edges at multiple levels of the network, as illustrated in the figure above. I won’t go into the details of the algorithm here, but I recommend reading the paper; its design is quite ingenious.
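To make the multi-level structure a little more concrete without going through the whole paper, here is a NumPy sketch of how HED combines its outputs. Each of five VGG16 stages produces a one-channel side output at 1/1, 1/2, 1/4, 1/8, and 1/16 of the input resolution; these are upsampled back to full size, and a learned weighted sum of them is passed through a sigmoid to give the fused edge map. The paper also averages the fused map with the individual side outputs, so the sketch returns both. Nearest-neighbor upsampling stands in for HED's deconvolution layers, the uniform 1/5 weights stand in for learned ones, and the function names are my own.

```python
import numpy as np

# Downsampling factor of the side output from each of the five VGG16 stages.
STRIDES = (1, 2, 4, 8, 16)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def upsample_nearest(a: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbor upsampling (a stand-in for HED's deconvolution layers)."""
    return np.repeat(np.repeat(a, factor, axis=0), factor, axis=1)


def hed_fuse(side_logits, weights=None):
    """Fuse five side-output logit maps into full-resolution edge probabilities.

    Returns (fused, averaged): the sigmoid of the weighted sum of side logits,
    and the mean of that map with the five individual side-output probabilities.
    """
    if weights is None:
        weights = np.full(len(side_logits), 1.0 / len(side_logits))
    up = [upsample_nearest(a, s) for a, s in zip(side_logits, STRIDES)]
    # Crop everything to the smallest upsampled size so the maps align.
    h = min(u.shape[0] for u in up)
    w = min(u.shape[1] for u in up)
    up = [u[:h, :w] for u in up]
    # Coarse stages contribute blocky, spread-out responses after upsampling,
    # one reason the fused edges come out thicker than a single-pixel contour.
    fused = sigmoid(sum(wm * u for wm, u in zip(weights, up)))
    averaged = np.mean([fused] + [sigmoid(u) for u in up], axis=0)
    return fused, averaged
```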

I used an open-source implementation of HED and did not fine-tune the model, using the pre-trained weights provided by the author instead. The image below shows the results of HED edge detection on shoe soles. The results are excellent: the edges are detected with very high precision, and the algorithm handles complex images well. The downside of HED is equally clear, however: it is much slower than traditional methods. Without strong GPU support, this speed is unacceptable for real-time detection on a factory production line, which rules HED out for real-time industrial inspection in that setting.
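Whether a detector is fast enough depends on the line's cycle time, so it helps to measure latency per image rather than rely on impressions. A minimal sketch using only the standard library; `median_latency`, `fits_cycle_time`, and the budget value you pass in are hypothetical. For GPU inference, the asynchronous GPU work must also be finished (synchronized) before the clock is read, otherwise the measured time is too short.

```python
import statistics
import time
from typing import Any, Callable


def median_latency(detect: Callable[[Any], Any], image: Any,
                   repeats: int = 10, warmup: int = 2) -> float:
    """Median wall-clock seconds per call of detect(image), after a few warm-up calls."""
    for _ in range(warmup):
        detect(image)  # first calls often include one-off costs (model loading, caching)
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        detect(image)
        times.append(time.perf_counter() - start)
    return statistics.median(times)


def fits_cycle_time(detect: Callable[[Any], Any], image: Any, budget_s: float) -> bool:
    """True if the median latency fits within the per-part time budget."""
    return median_latency(detect, image) <= budget_s
```

Running the same harness on the traditional pipeline and on HED with identical images gives a like-for-like comparison against the time available per part.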

HED already performs impressively. Next, I plan to collect more image data, manually annotate the edges, and use this data to fine-tune the HED model to see whether its accuracy improves. Of course, this will also require new GPUs.
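For the fine-tuning step, the choice of loss matters because edge pixels are a small minority of each annotated image. HED handles this with a class-balanced cross-entropy that weights edge pixels by β = |Y-| / |Y| (the fraction of non-edge pixels) and non-edge pixels by 1 - β. Below is a NumPy sketch of that loss for a single map; in an actual fine-tuning run it would be written in the training framework and applied to every side output as well as the fused output. The function name and the clipping constant are my own choices.

```python
import numpy as np


def class_balanced_bce(prob: np.ndarray, label: np.ndarray, eps: float = 1e-7) -> float:
    """HED-style class-balanced binary cross-entropy for one edge map.

    prob:  predicted edge probabilities in [0, 1]
    label: ground-truth edge map (1 = edge, 0 = non-edge)
    """
    prob = np.clip(prob, eps, 1.0 - eps)          # avoid log(0)
    edge = label > 0.5
    beta = np.count_nonzero(~edge) / label.size   # fraction of non-edge pixels
    loss_edge = -beta * np.log(prob[edge]).sum()
    loss_non_edge = -(1.0 - beta) * np.log(1.0 - prob[~edge]).sum()
    return float(loss_edge + loss_non_edge)
```

With one edge pixel out of four and a prediction of 0.5 everywhere, each class contributes 0.75 ln 2 to the total: the single edge pixel carries as much weight as the three background pixels together, which is the point of the balancing.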

Conclusion

The comparisons above show that the deep learning-based HED algorithm excels at edge detection for shoe components and can handle much more complex images. Although it is also affected by changes in lighting, it is far more robust than traditional edge detection algorithms. One issue with HED is that the detected edges are somewhat thick. Because HED predicts edges at multiple scales, the final edge map is effectively a superposition of edge maps from those scales, and the coarser scales spread each edge over several pixels. This can reduce measurement precision. The issue can, however, be mitigated by post-processing that thins the edges, such as non-maximum suppression.
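A minimal NumPy sketch of non-maximum suppression for thinning a thick edge-probability map such as HED's output. It keeps a pixel only if it is at least as strong as its two neighbors along the local gradient direction of the probability map (quantized to 0, 45, 90, or 135 degrees), and then applies a threshold. This is a simplified version of the idea, not the exact routine used in HED's evaluation; the function name and the 0.5 threshold are assumptions.

```python
import numpy as np


def thin_edges(prob: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Boolean map of pixels that are local maxima across the edge and above threshold."""
    gy, gx = np.gradient(prob)  # derivatives along rows (y) and columns (x)
    angle = (np.rad2deg(np.arctan2(gy, gx)) + 180.0) % 180.0
    bins = np.round(angle / 45.0).astype(int) % 4  # 0: 0deg, 1: 45deg, 2: 90deg, 3: 135deg
    # Neighbor offset (dy, dx) along the gradient for each quantized direction.
    offsets = {0: (0, 1), 1: (1, 1), 2: (1, 0), 3: (1, -1)}
    h, w = prob.shape
    padded = np.pad(prob, 1, mode="constant")
    keep = np.zeros(prob.shape, dtype=bool)
    for b, (dy, dx) in offsets.items():
        forward = padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]   # prob[i + dy, j + dx]
        backward = padded[1 - dy:1 - dy + h, 1 - dx:1 - dx + w]  # prob[i - dy, j - dx]
        local_max = (prob >= forward) & (prob >= backward)
        keep |= (bins == b) & local_max
    return keep & (prob >= threshold)
```

Applied to a ridge five pixels wide with its peak in the middle column, only that middle column survives, turning a thick response into a one-pixel contour that can be measured more precisely.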

A significant drawback of HED, however, is its slow speed, so applying it on an industrial production line requires strong GPU support. To use computational resources efficiently, it may be prudent to use different algorithms for different shoe components: traditional edge detection for simpler images and HED for more complex ones. This approach would balance speed and accuracy. Fine-tuning HED specifically for shoe components is, of course, also indispensable.
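The mixed strategy could be organized as a small routing layer. The sketch below is one hypothetical arrangement: a per-component table picks either the fast traditional pipeline or HED, and a fast measurement that falls outside a tolerance around the component's nominal size is re-measured with HED rather than trusted. The component names, sizes, tolerances, and the fallback rule are all illustrative assumptions, and the detectors are passed in as plain functions so any implementation can be plugged in.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional, Tuple

# A detector takes an image and returns a measured length in mm, or None on failure.
Detector = Callable[[Any], Optional[float]]


@dataclass
class ComponentSpec:
    method: str          # "traditional" or "hed"
    nominal_mm: float    # expected size of the component
    tolerance_mm: float  # allowed deviation before a fast result is re-checked


def measure(component: str, image: Any, specs: Dict[str, ComponentSpec],
            detectors: Dict[str, Detector]) -> Tuple[Optional[float], str]:
    """Return (measurement, method actually used) for one component image."""
    spec = specs[component]
    if spec.method == "traditional":
        value = detectors["traditional"](image)
        if value is not None and abs(value - spec.nominal_mm) <= spec.tolerance_mm:
            return value, "traditional"
    # Complex components, and fast results that look implausible, go to HED.
    return detectors["hed"](image), "hed"
```

Re-measuring outliers with HED means a part that really is out of spec gets confirmed by the more accurate method before it is flagged, at the cost of extra latency for those parts only.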

This project has convinced me that machine learning-based computer vision will play an increasingly important role on industrial production lines.